In the late 20th century, computing power grew at an astonishing pace. Every year, CPUs became faster, smaller, and more efficient. Clock speeds climbed steadily, from a few megahertz in the early 1980s to over 3 GHz by the mid-2000s. Moore’s Law, which predicted that the number of transistors on a chip would double approximately every two years, seemed unstoppable. But around 2005, something unexpected happened: the meteoric rise in CPU clock speeds suddenly stalled. This plateau puzzled tech enthusiasts and industry veterans alike.

CPU clock speeds stopped climbing quickly around 2005. Before that, chips got faster almost every year, but after 2005, heat and power problems made it hard to push clock speeds much higher. Instead of simply raising the frequency, chipmakers started adding more cores so a processor could work on many tasks at once. So while clock speeds barely grew, performance kept improving in other ways. Today, CPU design focuses as much on energy efficiency and multitasking as on raw speed.

In this article, we’ll explore the timeline of CPU speed evolution, the reasons behind the plateau, and how the industry responded. We’ll also look ahead to see where computing performance is heading.

The Early Years: When Speed Was King

From the 1970s through the early 2000s, CPU development was largely driven by increasing clock speeds. Early processors like Intel’s 8086 (1978) ran at just 5 MHz. By March 2000, AMD’s Athlon and Intel’s Pentium III had both crossed 1 GHz. In just over two decades, CPU frequencies grew roughly 200-fold.

Notable milestones include:

- Intel 80386 (1985): 16–40 MHz

- Pentium MMX (1997): Up to 233 MHz

- Pentium III (1999): Launched at 450–500 MHz; reached 1 GHz in 2000

- Pentium 4 (2000): Launched at 1.4–1.5 GHz; peaked at 3.8 GHz in 2004

This era saw consistent gains in both frequency and performance. For consumers, a new PC every 2–3 years meant dramatically faster experiences.

The Plateau Begins: Around 2005

The trend of increasing CPU speed hit a wall around 2005. Intel’s Pentium 4, with its NetBurst architecture, was supposed to scale up to 10 GHz. Instead, it ran into major limitations at just under 4 GHz. Heat and power consumption became unmanageable.

While minor gains in frequency continued, they were far less dramatic. Today, most desktop CPUs still run at base clocks between roughly 3.0 and 4.5 GHz, with short turbo boosts somewhat higher. In terms of raw clock speed, not much has changed in nearly two decades.

Why Did CPU Speed Plateau?

The primary reasons behind the CPU speed plateau are rooted in physics and engineering limitations:

1. Heat Dissipation

As clock speed increases, so does power consumption. This results in excessive heat. CPUs require cooling systems to maintain stable temperatures. At high frequencies, even advanced cooling solutions struggle.

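For example, a chip’s dynamic (switching) power is roughly proportional to its switched capacitance times voltage squared times frequency. Doubling the frequency alone doubles power, but higher frequencies usually also require a higher supply voltage, so in practice power rises much faster than clock speed. Without groundbreaking thermal technologies, pushing CPUs beyond 4–5 GHz is highly inefficient.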
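To make the power relationship concrete, here is a minimal Python sketch of the standard dynamic-power approximation for CMOS chips. The activity factor, capacitance, and voltages are made-up illustrative values, and the assumption that voltage must rise in step with frequency is a deliberate simplification; real chips land somewhere between the two cases shown.

```python
# Minimal sketch of the textbook dynamic-power model for CMOS logic:
#   P_dynamic = alpha * C * V**2 * f
# alpha = activity factor, C = switched capacitance (farads),
# V = supply voltage (volts), f = clock frequency (hertz).
# All numbers below are illustrative assumptions, not measurements of any real CPU.

def dynamic_power(alpha: float, capacitance: float, voltage: float, freq_hz: float) -> float:
    """Return dynamic switching power in watts."""
    return alpha * capacitance * voltage ** 2 * freq_hz


# Hypothetical baseline chip: 3 GHz at 1.0 V.
ALPHA = 0.2
CAP = 50e-9  # 50 nF of total switched capacitance (assumed)
base = dynamic_power(ALPHA, CAP, 1.0, 3e9)

# Case 1: double the clock, keep the voltage fixed -> power doubles.
freq_only = dynamic_power(ALPHA, CAP, 1.0, 6e9)

# Case 2: double the clock and (simplistically) assume the voltage must
# rise in proportion to frequency -> power grows by 2 * 2**2 = 8x.
freq_and_voltage = dynamic_power(ALPHA, CAP, 2.0, 6e9)

print(f"baseline:          {base:.0f} W")
print(f"2x clock, same V:  {freq_only / base:.1f}x power")
print(f"2x clock, 2x V:    {freq_and_voltage / base:.1f}x power")
```

With voltage fixed, doubling the clock doubles power; if voltage has to rise proportionally, power grows eightfold. That steep curve is why chasing GHz became so costly.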

2. Power Consumption and Efficiency

High-speed processors consume more energy. This makes them unsuitable for mobile and embedded systems where battery life is critical. Engineers needed to find better ways to increase performance without draining power.

3. Diminishing Returns

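At a certain point, increasing clock speed brings minimal benefits. Performance depends on many factors, including architecture, memory access, and parallelism. Simply raising the GHz no longer provides proportional gains: when a program spends much of its time waiting for data from memory, a faster clock only shortens the time spent computing.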
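A toy model shows why. The sketch below assumes, hypothetically, that a program needs a fixed number of compute cycles plus a fixed amount of time waiting on memory, and that the memory time doesn’t shrink when the CPU clock rises. Real workloads overlap computation and memory access in more complicated ways.

```python
# Toy model of why a faster clock gives diminishing returns.
# Assumption: a workload needs a fixed number of compute cycles (which
# take less time as the clock rises) plus a fixed amount of time spent
# waiting on memory (which does not depend on the CPU clock).
# The numbers are hypothetical.

def run_time_s(compute_cycles: float, memory_stall_s: float, freq_hz: float) -> float:
    """Total time = compute time (scales with 1/f) + memory time (fixed)."""
    return compute_cycles / freq_hz + memory_stall_s


def speedup(compute_cycles: float, memory_stall_s: float, f_old: float, f_new: float) -> float:
    """How much faster the workload runs at f_new compared with f_old."""
    old = run_time_s(compute_cycles, memory_stall_s, f_old)
    new = run_time_s(compute_cycles, memory_stall_s, f_new)
    return old / new


CYCLES = 3e9  # 3 billion cycles of actual computation (assumed)
STALL = 1.0   # 1 second spent waiting on DRAM (assumed)

# At 3 GHz: 1 s compute + 1 s memory = 2 s.
# At 6 GHz: 0.5 s compute + 1 s memory = 1.5 s -> only ~1.33x faster.
print(f"{speedup(CYCLES, STALL, 3e9, 6e9):.2f}x faster from doubling the clock")
```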

4. The End of Dennard Scaling

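Dennard scaling, described by Robert Dennard and colleagues in 1974, held that as transistors shrink, their voltage and current shrink with them, so power density stays roughly constant. This allowed each new generation of chips to run faster without overheating. But by the mid-2000s, the scaling broke down: supply voltages could no longer be lowered much further without transistor leakage rising sharply, and the resulting heat buildup limited further clock speed increases.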
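The arithmetic behind Dennard scaling can be sketched with relative factors. This Python example assumes the idealized textbook rules (capacitance and voltage scale by 1/k, frequency by k, transistor density by k²) and compares them with what happens when voltage stops scaling; it’s an illustration, not a model of any real process node.

```python
# Sketch of classic Dennard scaling arithmetic, using relative (unitless) factors.
# Shrink every linear dimension by a factor k (k > 1 means smaller transistors).
# Idealized rules: capacitance ~ 1/k, voltage ~ 1/k, frequency ~ k,
# and k**2 more transistors fit in the same area.

def power_density(k: float, voltage_scales: bool = True, freq_scales: bool = True) -> float:
    """Relative power per unit area after one shrink by factor k."""
    capacitance = 1 / k
    voltage = 1 / k if voltage_scales else 1.0
    frequency = k if freq_scales else 1.0
    power_per_transistor = capacitance * voltage ** 2 * frequency
    transistors_per_area = k ** 2
    return power_per_transistor * transistors_per_area


k = 1.4  # roughly one process generation (~0.7x linear shrink)
print(f"Dennard era:             {power_density(k):.2f}x power density")
print(f"Voltage stuck, f scales: {power_density(k, voltage_scales=False):.2f}x")
print(f"Voltage stuck, f frozen: {power_density(k, voltage_scales=False, freq_scales=False):.2f}x")
```

In the ideal case power density stays flat. Once voltage stops dropping, each shrink raises power density even if the clock is frozen, which is the squeeze chipmakers hit in the mid-2000s.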

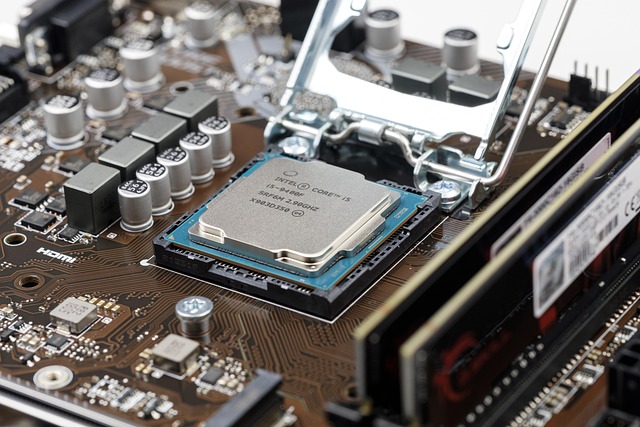

The Shift to Multi-Core CPUs

To bypass these physical limits, chipmakers pivoted. Rather than pushing a single core to go faster, they began adding more cores.

How Multi-Core Works

A multi-core CPU includes several processing units (cores) within a single chip. These cores can handle separate tasks simultaneously, improving multitasking and parallel processing.

This shift marked a new era in computing:

- Dual-core chips appeared in mainstream PCs around 2005.

- Quad-core CPUs became common by 2007–2008.

- Today, 8-core and 12-core desktop CPUs are common, and workstation and server chips offer 64 cores or more.

Benefits of Multi-Core CPUs

- Better multitasking performance

- Improved energy efficiency

- Faster rendering, video editing, gaming, and AI workloads

- Continued performance scaling without higher GHz

The Role of Software Optimization

Hardware improvements alone aren’t enough. Software also needed to evolve to take advantage of multiple cores.

Multithreaded Applications

Developers started creating applications capable of running work in parallel. Modern operating systems and software, such as Adobe Premiere Pro, AutoCAD, and even web browsers, now use multiple threads for many tasks.
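One standard way to see why software had to change is Amdahl’s law, a well-known model not otherwise discussed in this article: the serial part of a program caps how much extra cores can help. The Python sketch below computes the ideal speedup and ignores real overheads such as synchronization and memory bandwidth, so real-world gains are usually lower.

```python
# Amdahl's law: if a fraction p of a program's work can run in parallel,
# the best-case speedup on n cores is 1 / ((1 - p) + p / n).
# This ignores real-world overheads (synchronization, memory bandwidth),
# so actual speedups are usually lower.

def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


for p in (0.5, 0.9, 0.99):
    row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 8, 64))
    print(f"{p:.0%} parallel -> {row}")
```

A program that is only half parallel never gets more than 2x faster, no matter how many cores it has, which is why developers had to restructure their code rather than wait for more cores.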

Gaming Performance

Game engines were initially built around single-threaded performance. Today, AAA titles like Cyberpunk 2077 and Call of Duty spread their work across multiple cores for better frame rates and smoother gameplay.

Beyond GHz: Architectural Improvements

While clock speed has plateaued, CPU performance has not. Modern processors are far superior to their early 2000s counterparts, thanks to advanced microarchitectures.

Key Architectural Innovations Include:

- Smaller Transistor Nodes: From 65nm in 2006 to 3nm-class nodes by 2023 (node names are now marketing labels rather than literal feature sizes)

- Hyper-Threading (Intel) / SMT (AMD): One core runs two threads at once by sharing its execution resources

- Better Cache Management: Faster data access and lower latency

- Out-of-Order Execution: Runs instructions as soon as their inputs are ready rather than strictly in program order, keeping execution units busy

- Branch Prediction: Guesses which way a branch will go so the pipeline keeps working instead of stalling

These changes allow modern CPUs to do more with the same or lower clock speeds.
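As one concrete example of these techniques, here is a Python sketch of a 2-bit saturating-counter branch predictor, a classic textbook design. It is far simpler than the predictors in any shipping CPU, and the branch address and loop trace are hypothetical.

```python
# Toy 2-bit saturating-counter branch predictor (a classic textbook scheme;
# real CPUs use far more elaborate predictors). Counter states 0-1 predict
# "not taken", states 2-3 predict "taken". Each actual outcome nudges the
# counter one step, so a single surprise doesn't flip a well-established guess.

from typing import Dict, List, Tuple


class TwoBitPredictor:
    def __init__(self) -> None:
        self.counters: Dict[int, int] = {}  # branch address -> counter (0..3)

    def predict(self, address: int) -> bool:
        return self.counters.get(address, 1) >= 2  # unseen branches start weakly "not taken"

    def update(self, address: int, taken: bool) -> None:
        c = self.counters.get(address, 1)
        self.counters[address] = min(c + 1, 3) if taken else max(c - 1, 0)


def accuracy(trace: List[Tuple[int, bool]]) -> float:
    """Fraction of branches in the trace that the predictor guesses correctly."""
    predictor = TwoBitPredictor()
    correct = 0
    for address, taken in trace:
        if predictor.predict(address) == taken:
            correct += 1
        predictor.update(address, taken)
    return correct / len(trace)


# Hypothetical trace: a loop branch at address 0x40 that is taken 9 times,
# then falls through once (a 10-iteration loop), repeated 10 times.
loop_trace = ([(0x40, True)] * 9 + [(0x40, False)]) * 10
print(f"prediction accuracy: {accuracy(loop_trace):.0%}")
```

On this loop pattern the predictor is right 89% of the time: after one warm-up miss, it mispredicts only the loop exit, because a single surprise outcome isn’t enough to flip a strongly "taken" counter.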

The ARM Revolution and Apple Silicon

In recent years, Apple’s M1, M2, and M3 chips based on ARM architecture have disrupted the market. These chips focus on performance-per-watt rather than raw clock speed.

Why Apple’s Chips Matter

- High efficiency with lower power consumption

- Integrated GPU and Neural Engine for machine learning

- Unified memory architecture for faster data access

Despite running at lower clock speeds, Apple Silicon matches or beats many higher-clocked Intel and AMD CPUs in real-world tasks, often while using far less power.

AI Acceleration and Specialized Cores

AI workloads are growing fast. To keep up, CPUs now integrate specialized components:

What’s Changing:

- Neural Processing Units (NPUs): Boost AI performance

- Integrated GPUs: Handle graphics and parallel computation

- Tensor Cores (in NVIDIA GPUs): Accelerate the matrix math behind machine learning

These changes reduce the need to rely solely on CPU speed. Tasks like image recognition or large language model inference can run faster using dedicated hardware.

The Future: What Comes After the Plateau?

As clock speed remains limited, what lies ahead?

1. Heterogeneous Computing

Chips increasingly mix different types of cores (high-performance, high-efficiency, and specialized AI units) on one chip. Intel’s Alder Lake and Apple’s M-series chips already combine performance and efficiency cores, and this approach is likely to become the norm.

2. Chiplets and Modular Design

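Many of AMD’s Ryzen and EPYC CPUs use a chiplet-based architecture. Instead of one large monolithic die, they combine several smaller dies in one package. This improves manufacturing yields, lets AMD scale core counts by adding chiplets, and makes it easier to build a range of products from the same building blocks.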
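The yield benefit can be illustrated with the simple Poisson yield model, Y = exp(-A × D). This Python sketch uses hypothetical die sizes and a made-up defect density, and it ignores the packaging and interconnect costs that chiplet designs add; it only shows why smaller dies lose less silicon to each defect.

```python
# Sketch: why smaller dies improve manufacturing yield.
# Uses the simple Poisson yield model Y = exp(-A * D), where A is die area
# (cm^2) and D is defect density (defects per cm^2). Real fabs use more
# refined models; the defect density and die sizes here are hypothetical.

import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to have zero defects."""
    return math.exp(-area_cm2 * defects_per_cm2)


D = 0.1           # assumed defect density
MONOLITHIC = 6.0  # one 600 mm^2 die
CHIPLET = 0.75    # one 75 mm^2 chiplet (8 of them cover the same area)

mono = poisson_yield(MONOLITHIC, D)
chip = poisson_yield(CHIPLET, D)

# With chiplets, a defect only scraps one small die; good chiplets can be
# combined freely, so usable silicon tracks the per-chiplet yield rather
# than the big-die yield.
print(f"monolithic die yield: {mono:.1%}")
print(f"per-chiplet yield:    {chip:.1%}")
```

With these assumed numbers, about 55% of the large dies come out defect-free, versus about 93% of the chiplets.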

3. Quantum Computing

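Still experimental, quantum computers could revolutionize certain kinds of computation. They use quantum bits (qubits), which can exist in superpositions of 0 and 1, and quantum algorithms can offer dramatic speedups on specific problems such as factoring large numbers or simulating molecules. They aren’t replacing CPUs, but they promise breakthroughs in science and cryptography.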

4. Optical and Neuromorphic Computing

Researchers are exploring optical processors and brain-like computing models. These systems aim to overcome thermal and speed limitations of traditional silicon.

Why GHz Still Matters, But Not Alone

Clock speed still has value. In many single-threaded applications, higher GHz improves performance. However, it is no longer the sole indicator of CPU power.

Today’s performance depends on:

- Number of cores and threads

- Cache size

- Memory speed and latency

- Integrated AI and GPU acceleration

- Software optimization

The industry now focuses on balanced, efficient, and intelligent computing.

Does CPU speed decrease over time?

CPU speed does not inherently decrease over time, but performance may seem slower due to software updates, increased system demands, or overheating. Dust buildup, aging thermal paste, and worn-out cooling systems can cause thermal throttling, which reduces speed to prevent damage.

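The chip’s rated clock speed never changes. When it does run slower, it’s because throttling is temporarily lowering the clock in response to heat; the rest of the slowdown comes from heavier software. Either way, it can seem like the CPU is degrading when it’s actually the surrounding conditions affecting performance.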

What was the average CPU speed in 1998?

In 1998, mainstream desktop CPUs typically ran at between 300 MHz and 450 MHz. Popular processors at the time included Intel’s Pentium II and AMD’s K6 series.

These CPUs marked a significant step forward in performance compared to previous years, but they were still vastly underpowered compared to today’s multi-gigahertz processors. The computing demands were also much lower back then, so these speeds were sufficient for everyday tasks such as browsing, word processing, and basic gaming.

Is a 3.0 GHz processor 50% faster than a 2.0 GHz processor?

Not necessarily. While 3.0 GHz is a 50% higher frequency than 2.0 GHz, performance depends on other factors like CPU architecture, number of cores, and instructions per clock (IPC).

A newer 2.0 GHz processor may outperform an older 3.0 GHz CPU due to design improvements. Clock speed alone doesn’t determine actual performance; it’s one piece of a larger puzzle involving how efficiently the processor handles data and executes tasks.
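A rough way to see this is to multiply IPC by clock frequency. The Python sketch below uses hypothetical IPC values (real IPC varies a lot from one workload to another) and ignores multiple cores, so treat it as a rule of thumb rather than a benchmark.

```python
# Rough rule of thumb: single-thread throughput ~ IPC x clock frequency.
# IPC (instructions per clock) varies by workload; the figures below are
# hypothetical, chosen only to show how a lower-clocked chip can win.

def instructions_per_second(ipc: float, freq_ghz: float) -> float:
    return ipc * freq_ghz * 1e9


older_3ghz = instructions_per_second(ipc=1.5, freq_ghz=3.0)  # hypothetical older design
newer_2ghz = instructions_per_second(ipc=3.0, freq_ghz=2.0)  # hypothetical newer design

print(f"older 3.0 GHz chip: {older_3ghz / 1e9:.1f} billion instructions/s")
print(f"newer 2.0 GHz chip: {newer_2ghz / 1e9:.1f} billion instructions/s")
print(f"newer chip is {newer_2ghz / older_3ghz:.2f}x faster despite a lower clock")
```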

Why does CPU clock speed drop?

CPU clock speed can drop due to thermal throttling, power-saving settings, or inadequate cooling. Modern CPUs dynamically adjust their speeds to manage power consumption and temperature.

When a CPU gets too hot, it lowers its speed to prevent overheating and potential damage. Similarly, laptops often reduce CPU speeds on battery power to extend battery life. Background tasks, outdated drivers, or BIOS settings can also trigger unexpected clock speed drops during operation.
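The throttling behavior described above can be sketched as a simple control loop. Everything in this Python example, including the 95 °C limit, the 85 °C headroom threshold, and the 100 MHz step, is a hypothetical value chosen for illustration; real CPUs handle this in firmware and hardware with much finer control.

```python
# Toy thermal-throttling loop (hypothetical thresholds and step sizes; real
# CPUs implement this in firmware/hardware with far finer control).
# If the temperature is at or above the limit, step the clock down; if there
# is headroom, step it back up toward the maximum; otherwise hold steady.

def next_frequency(current_mhz: int, temp_c: float,
                   limit_c: float = 95.0, headroom_c: float = 85.0,
                   step_mhz: int = 100, min_mhz: int = 800, max_mhz: int = 4500) -> int:
    if temp_c >= limit_c:
        return max(current_mhz - step_mhz, min_mhz)  # too hot: throttle down
    if temp_c < headroom_c:
        return min(current_mhz + step_mhz, max_mhz)  # cool enough: boost up
    return current_mhz                               # in between: hold steady


freq = 4500
for temp in (80, 96, 97, 98, 90, 84, 83):
    freq = next_frequency(freq, temp)
    print(f"{temp:>3} C -> {freq} MHz")
```

The gap between the two thresholds acts as hysteresis, so the clock doesn’t bounce up and down every time the temperature crosses a single line.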

Conclusion: CPU Speed Plateaued, But Progress Didn’t

The clock speed plateau began around 2005. Since then, CPUs have evolved dramatically in other ways. Instead of chasing GHz, chipmakers now prioritize core count, architecture, energy efficiency, and intelligent processing.

We’ve entered an age where raw speed matters less than how efficiently a processor handles complex, diverse tasks. The GHz race may have slowed, but computing innovation continues full throttle.

FAQs:

1. Why did CPU clock speeds stop increasing after 2005?

CPU clock speeds plateaued primarily due to physical limitations. Higher speeds generate more heat and require more power, making it difficult to cool the processors efficiently. Beyond 3–4 GHz, the returns diminish, and further speed increases risk thermal failure. That’s why chipmakers shifted focus to efficiency and multi-core designs.

2. Can CPUs still go beyond 5 GHz today?

Yes, some high-end CPUs can boost past 5 GHz using turbo frequencies. However, this boost is usually temporary and depends on thermal and power constraints. Sustaining those speeds consistently isn’t practical in most use cases, especially in laptops and smaller systems.

3. How do modern CPUs improve performance without higher GHz?

Modern CPUs enhance performance using multiple cores, smarter architecture, larger cache, better branch prediction, and AI acceleration. These improvements allow better multitasking and faster execution of complex workloads without relying on raw clock speed.

4. Is GHz still important when choosing a CPU?

Yes, but it’s not the only factor. GHz matters for single-threaded tasks, but other specs—like core count, cache size, architecture, and integrated features—play a crucial role. For most users, balanced specs offer better real-world performance than just high clock speed.

5. What replaced clock speed as the new standard for CPU performance?

Instead of focusing on GHz, the industry now values performance-per-watt, multi-core efficiency, instructions per clock (IPC), and AI acceleration. These metrics provide a more accurate view of how well a CPU performs in everyday tasks and demanding workloads.
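Performance-per-watt itself is simple arithmetic: a benchmark score divided by the power drawn while achieving it. The two chips and every number in this Python sketch are hypothetical.

```python
# Performance-per-watt: divide a benchmark score by the power drawn while
# producing it. Both chips and all numbers here are hypothetical.

from dataclasses import dataclass


@dataclass
class ChipResult:
    name: str
    score: float  # benchmark score (higher is better)
    watts: float  # average power during the run

    @property
    def perf_per_watt(self) -> float:
        return self.score / self.watts


chips = [
    ChipResult("hypothetical high-clock chip", score=1200, watts=120),
    ChipResult("hypothetical efficient chip", score=1000, watts=40),
]

for c in sorted(chips, key=lambda r: r.perf_per_watt, reverse=True):
    print(f"{c.name}: {c.perf_per_watt:.1f} points per watt")
```

Here the slower chip wins on efficiency by a wide margin, which is exactly the trade-off laptops and data centers increasingly care about.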